g. Set up a Job Definition

In this step, you set up a job definition, the template AWS Batch uses to launch your jobs. Every job you submit references a job definition, and each time you update a definition AWS Batch registers a new revision, so you can version how your jobs are launched. For more information about job definitions, see Job Definitions.
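
Job definitions are versioned as revisions. A job can reference one by name (which resolves to the latest active revision), by `name:revision`, or by ARN. The sketch below is a minimal illustration, not part of the original workshop, and assumes that a job definition and a job queue already exist. The `REPLACE_WITH_*` values and the job name `carla-av-demo-run` are hypothetical placeholders. The script builds a `name:revision` reference, and only when you set `RUN_AWS=1` does it list the active revisions and submit a job pinned to that revision.

```shell
#!/bin/sh
# Minimal sketch: pin a job to a specific job definition revision.
# REPLACE_WITH_* values and the job name are hypothetical placeholders.
set -eu

JOB_DEF_NAME="${JOB_DEF_NAME:-REPLACE_WITH_JOB_DEFINITION_NAME}"
JOB_QUEUE="${JOB_QUEUE:-REPLACE_WITH_JOB_QUEUE_NAME}"
REVISION="${REVISION:-1}"

# AWS Batch accepts "name", "name:revision", or an ARN; "name" alone
# resolves to the latest ACTIVE revision.
JOB_DEF_REF="${JOB_DEF_NAME}:${REVISION}"
echo "Job definition reference: ${JOB_DEF_REF}"

# Call AWS only when explicitly requested (requires AWS credentials).
if [ "${RUN_AWS:-0}" = "1" ]; then
  # List the revision numbers that are still ACTIVE for this job definition.
  aws batch describe-job-definitions --job-definition-name "$JOB_DEF_NAME" \
    --status ACTIVE --query 'jobDefinitions[].revision' --output text
  # Submit a job that uses exactly this revision.
  aws batch submit-job --job-name carla-av-demo-run \
    --job-queue "$JOB_QUEUE" --job-definition "$JOB_DEF_REF"
fi
```

Pinning a revision means a later change to the job definition does not silently change how an existing pipeline launches its jobs.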

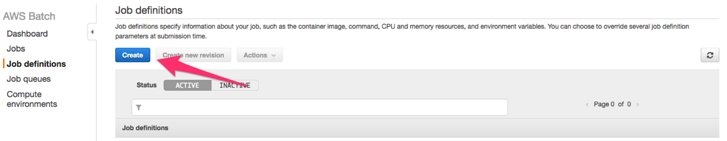

- In the AWS Batch Dashboard, choose Job definitions, then Create.

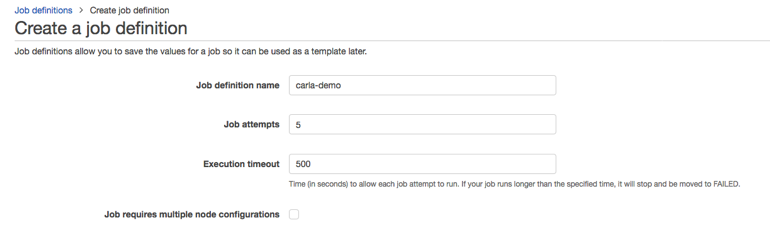

- Type a Job definition name.

- For Job attempts, type 5. This option specifies the number of attempts before declaring a job as failed.

- For Execution timeout, type 500. This option specifies how long, in seconds, each job attempt can run before AWS Batch terminates it if it has not finished.

- Skip to the Environment section.

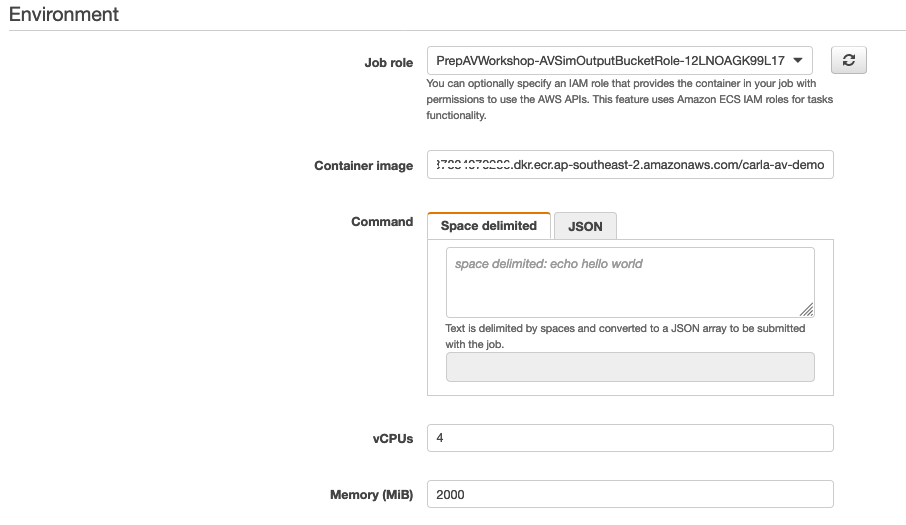

- For Job role, choose the role you created earlier that allows ECS tasks to access the output S3 bucket on your behalf. If you do not know the name of the job role, run the following command in your terminal.

```shell
echo "ECS Job Role: $(aws cloudformation describe-stacks --stack-name PrepAVWorkshop --output text --query 'Stacks[0].Outputs[?OutputKey == `ECSTaskPolicytoS3`].OutputValue')"
```

- For Container image, enter the repositoryUri generated when you created the ECR repository. If you do not know the URI, run the following command in your terminal.

```shell
aws ecr describe-repositories --repository-names carla-av-demo --output text --query 'repositories[0].[repositoryUri]'
```

- For vCPUs, type 4, and for Memory (MiB), type 2000.

- Skip to the Environment variables section.

- Choose Add. The environment variable you add here tells the application running in your container where to export data.

- For Key, type EXPORT_S3_BUCKET_URL. For Value, enter the bucket you created earlier. To find the name of the S3 bucket, run the following command in your terminal or open the S3 Dashboard in your account.

```shell
echo "S3 Output Bucket: $(aws cloudformation describe-stacks --stack-name PrepAVWorkshop --output text --query 'Stacks[0].Outputs[?OutputKey == `OutputBucket`].OutputValue')"
```

- Choose Create.
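
If you prefer the AWS CLI, the console settings from this step can be sketched as a job definition file for `aws batch register-job-definition --cli-input-json`. This is a minimal sketch, not part of the original workshop. The `REPLACE_WITH_*` values are hypothetical placeholders that you replace with your own job definition name, the repository URI, the job role's ARN, and the output bucket found with the commands above. The script calls AWS only when you set `REGISTER=1`.

```shell
#!/bin/sh
# Minimal sketch: write the console settings from this step as a job definition
# file for the AWS CLI. All REPLACE_WITH_* values are hypothetical placeholders.
set -eu

JOB_DEF_NAME="${JOB_DEF_NAME:-REPLACE_WITH_JOB_DEFINITION_NAME}"
IMAGE_URI="${IMAGE_URI:-REPLACE_WITH_REPOSITORY_URI}"
JOB_ROLE_ARN="${JOB_ROLE_ARN:-REPLACE_WITH_JOB_ROLE_ARN}"
EXPORT_S3_BUCKET_URL="${EXPORT_S3_BUCKET_URL:-REPLACE_WITH_OUTPUT_BUCKET}"

# retryStrategy.attempts maps to Job attempts;
# timeout.attemptDurationSeconds maps to Execution timeout.
cat > jobdef.json <<EOF
{
  "jobDefinitionName": "${JOB_DEF_NAME}",
  "type": "container",
  "retryStrategy": { "attempts": 5 },
  "timeout": { "attemptDurationSeconds": 500 },
  "containerProperties": {
    "image": "${IMAGE_URI}",
    "jobRoleArn": "${JOB_ROLE_ARN}",
    "resourceRequirements": [
      { "type": "VCPU", "value": "4" },
      { "type": "MEMORY", "value": "2000" }
    ],
    "environment": [
      { "name": "EXPORT_S3_BUCKET_URL", "value": "${EXPORT_S3_BUCKET_URL}" }
    ]
  }
}
EOF
echo "Wrote jobdef.json for ${JOB_DEF_NAME}"

# Register only when explicitly requested (requires AWS credentials).
if [ "${REGISTER:-0}" = "1" ]; then
  aws batch register-job-definition --cli-input-json file://jobdef.json
fi
```

Registering again with the same `jobDefinitionName` creates a new revision instead of overwriting the existing one, which keeps earlier launch settings available.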

Next, take a closer look at the compute environment, job queue, and job definition you created.